|

SqueezeBrains SDK 1.18

|

|

SqueezeBrains SDK 1.18

|

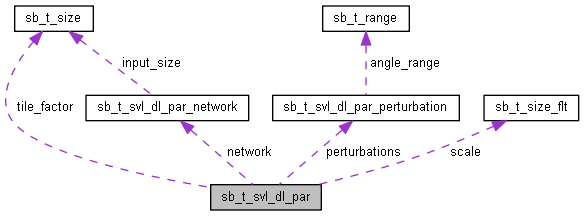

SVL parameters to configure the Deep Learning training. More...

#include <sb.h>

Data Fields | |

| sb_t_svl_dl_par_network | network |

| Network parameters. More... | |

| char | pre_training_file [512] |

| Network pre-training file path with extension SB_PRE_TRAINING_EXT. More... | |

| int | load_pre_training_models |

| Load models parameters from pre-training file. More... | |

| sb_t_svl_dl_par_perturbation | perturbations |

| Perturbations for deep learning training. More... | |

| float | learning_rate |

| Learning rate. More... | |

| int | num_epochs |

| Number of epochs. More... | |

| int | batch_size |

| Size of the batch used during SVL. More... | |

| float | validation_percentage |

| Validation percentage. More... | |

| int | save_best |

| At the end of the training, the best internal parameters configuration is recovered. More... | |

| sb_t_loss_fn_type | loss_fn |

| Loss function. More... | |

| sb_t_svl_dl_tiling_par | tiling_par_width |

| Width tiling configuration. | |

| sb_t_svl_dl_tiling_par | tiling_par_height |

| Height tiling configuration. | |

| sb_t_image_borders_extension_mode | borders_extension_mode |

| Image borders extension mode. More... | |

SVL parameters to configure the Deep Learning training.

Used only by Deep Cortex and Deep Surface projects.

| int sb_t_svl_dl_par::batch_size |

Size of the batch used during SVL.

The number of data to be processed before an update of the network weights.

The size of the batch must be a powers of 2, more than or equal to SB_SVL_DL_BATCH_SIZE_MIN and less than or equal to SB_SVL_DL_BATCH_SIZE_MAX .

Higher batch size values on computational device with limited memory resources may cause SB_ERR_DL_CUDA_OUT_OF_MEMORY .

There is no general rule to determine the optimal batch size. However usual values are in range from 4 to 256, depending on the number of images in training dataset.

Usually Deep Cortex projects require a batch value greater than the Deep Surface projects.

| sb_t_image_borders_extension_mode sb_t_svl_dl_par::borders_extension_mode |

Image borders extension mode.

Mode to use to extend image borders during image pre-processing.

| float sb_t_svl_dl_par::learning_rate |

Learning rate.

It represents the step size at each iteration while moving toward a minimum of a loss function. When setting a learning rate, there is a trade-off between the rate of convergence and the overfitting. Setting to small learning rate values may lead to overfitting.

Values range from SB_SVL_DL_LEARNING_RATE_MIN to SB_SVL_DL_LEARNING_RATE_MAX .

| int sb_t_svl_dl_par::load_pre_training_models |

Load models parameters from pre-training file.

If enabled the entire parameter configuration saved in the pre-training file is loaded for the current SVL, including the parameters necessary for models discrimination. This option is recommended when the learning contexts of pre-training file and the current training task are very similar, i.e. they share the same models (with the same order!).

If the parameter is enabled and the number of models in pre-training file is different from number of models of the current task, the function sb_svl_run return SB_ERR_DL_PRE_TRAINING_FILE_NOT_VALID .

| sb_t_loss_fn_type sb_t_svl_dl_par::loss_fn |

Loss function.

Used only by Deep Surface projects.

It is the function used to compute the amount of loss/error that have to be minimized at each training step, i.e. at the end of every batch.

| sb_t_svl_dl_par_network sb_t_svl_dl_par::network |

| int sb_t_svl_dl_par::num_epochs |

| sb_t_svl_dl_par_perturbation sb_t_svl_dl_par::perturbations |

Perturbations for deep learning training.

| char sb_t_svl_dl_par::pre_training_file[512] |

Network pre-training file path with extension SB_PRE_TRAINING_EXT.

Path to the file containing a pre-training network parameters/weights configuration.

If the file exists and is valid the network will be loaded as pre-trained, i.e. network parameters are not randomly initialized before training but they start from a pre-existing configuration, otherwise SB_ERR_DL_PRE_TRAINING_FILE_NOT_FOUND and SB_ERR_DL_PRE_TRAINING_FILE_NOT_VALID are respectively returned.

The use of a pre-training network has great advantages and usually leads to better results and faster training time than a training from scratch. All this provided that pre-trained network has been properly trained and learned parameters fit well to the current vision task.

After a reset of the SVL the training restarts from pre-training.

Currently the SB SDK includes a pre-training file only for the following network type: SB_NETWORK_TYPE_EFFICIENTNET_B0, SB_NETWORK_TYPE_EFFICIENTNET_B1 and SB_NETWORK_TYPE_EFFICIENTNET_B2. The pre-trainings are the official one released by Pytorch and computed on ImageNet dataset (link at the official website: https://www.image-net.org).

User can also create his own pre-training at any time with the function sb_svl_save_pre_training .

When the parameter is an empty string means that no pre-training will be loaded before to start SVL.

| network type | default value |

|---|---|

| SB_NETWORK_TYPE_EFFICIENTNET_B0 | EfficientNet-b0.ptf |

| SB_NETWORK_TYPE_EFFICIENTNET_B1 | EfficientNet-b1.ptf |

| SB_NETWORK_TYPE_EFFICIENTNET_B2 | EfficientNet-b2.ptf |

| SB_NETWORK_TYPE_SDINET0 | empty string |

| SB_NETWORK_TYPE_ICNET0_64 | |

| SB_NETWORK_TYPE_ICNET0_128 |

| int sb_t_svl_dl_par::save_best |

At the end of the training, the best internal parameters configuration is recovered.

The best internal parameters configuration is the value of the weights at the epoch with the lowest validation loss. If training validation is disabled, the epoch with lowest training loss is selected.

0 Means disabled.

| float sb_t_svl_dl_par::validation_percentage |

Validation percentage.

Percentage of the training tiles to be used to validate the training.

The number of tiles is obtained rounding the amount to the smallest integer.

In case of incremental SVL:

In Deep Cortex projects exists only one tile per image.

The value ranges from SB_SVL_DL_VALIDATION_PERCENTAGE_MIN to SB_SVL_DL_VALIDATION_PERCENTAGE_MAX .